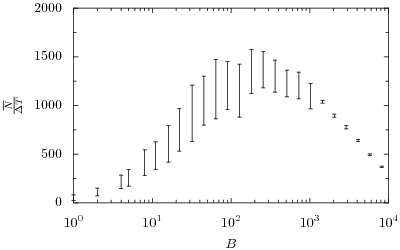

This is a plot of the number of documents created per bulk update against the average number of documents created per second that it yields.

Tag: couchdb

Benchmarking CouchDB (1)

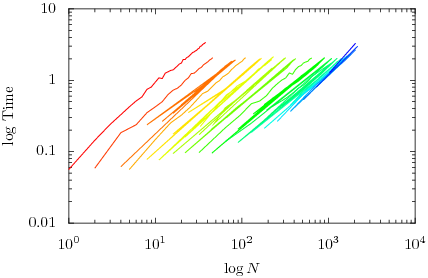

I’ve written a small benchmark for CouchDB to test its document creation performance. A script creates [tex]$N$[/tex] documents in total, using bulk update to create [tex]$B$[/tex] at a time, with [tex]$T$[/tex] concurrent threads. The following graph shows the time it takes to create a number of documents against that number of documents, for different values of [tex]$B$[/tex] with [tex]$T=1$[/tex].
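A minimal sketch of how such a benchmark could be structured is shown here. It is not the original script. The function names `post_bulk` and `run_benchmark`, the server URL, and the database name are assumptions for illustration, and the `{"docs": [...]}` request body follows the `_bulk_docs` format of current CouchDB releases, which may differ from the version tested in this post.

```python
import json
import threading
import time
import urllib.request


def post_bulk(base_url, db, docs):
    """POST one batch of documents to the _bulk_docs endpoint (assumed format)."""
    req = urllib.request.Request(
        f"{base_url}/{db}/_bulk_docs",
        data=json.dumps({"docs": docs}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        resp.read()


def run_benchmark(n, b, t, send):
    """Create n empty documents in batches of at most b, using t threads.

    `send` is called with a list of documents. Returns elapsed seconds.
    """
    # Sizes of the batches that together add up to n documents.
    batches = [min(b, n - start) for start in range(0, n, b)]
    lock = threading.Lock()

    def worker():
        while True:
            with lock:
                if not batches:
                    return
                size = batches.pop()
            send([{} for _ in range(size)])

    threads = [threading.Thread(target=worker) for _ in range(t)]
    started = time.perf_counter()
    for th in threads:
        th.start()
    for th in threads:
        th.join()
    return time.perf_counter() - started
```

Against a local server this might be called as `run_benchmark(1000, 64, 2, lambda docs: post_bulk("http://localhost:5984", "bench", docs))`. Passing `send` in as a parameter keeps the timing loop separate from the HTTP details.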

And for [tex]$T=2$[/tex] (two concurrent threads, tested on a dual-core machine):

The values of [tex]$B$[/tex] are 1, 2, 4, 5, 8, 11, 16, 22, 32, 45, 64, 90, 128, 181, 256, 362, 512, 724 and 1024.
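These values look like powers of [tex]$\sqrt{2}$[/tex] rounded down, with duplicates dropped. That is an inference from the numbers, not something stated about the original script. A short sketch that generates the same list under that assumption (the name `batch_sizes` is hypothetical):

```python
import math


def batch_sizes(max_exponent=20):
    """Return floor(sqrt(2**k)) for k = 0..max_exponent, without duplicates."""
    sizes = []
    for k in range(max_exponent + 1):
        size = math.isqrt(2 ** k)  # exact integer floor of 2**(k/2)
        if not sizes or sizes[-1] != size:
            sizes.append(size)
    return sizes
```

Spacing the batch sizes geometrically gives evenly spaced points on a logarithmic axis, which is convenient when plotting against [tex]$B$[/tex].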

As you can see, a higher value of [tex]$B$[/tex] shifts the graph to the right, which means more [tex]$N$[/tex] for the same time. Bulk update really does make a difference. Or non-bulk-update really sucks. Also, adding threads does help a bit, but not as much as expected.

There are some more interesting graphs to plot ([tex]$B$[/tex] against [tex]$\overline {N \over \Delta T} $[/tex]). More graphs tomorrow.
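One way to compute that averaged rate from measurements is sketched here. It assumes the overline means the mean of the per-sample rates, and that each sample is a `(documents_created, seconds)` pair. Both the sample format and the name `mean_rate` are assumptions, not the layout of the raw data.

```python
def mean_rate(samples):
    """Average documents per second over (docs_created, seconds) samples."""
    rates = [n / dt for n, dt in samples if dt > 0]
    if not rates:
        raise ValueError("no samples with positive duration")
    return sum(rates) / len(rates)
```

For example, `mean_rate([(100, 2.0), (300, 3.0)])` averages 50 and 100 documents per second.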

(For those interested, the raw data from which these graphs were plotted.)

CouchDB document creation performance

CouchDB is a non-relational database which uses MapReduce-inspired views to query data. There are lots of cool things to tell about its design, but I’d rather talk about its performance.

Today I’ve been busy hacking together a little script to import all e-mails of a long e-mail thread into a CouchDB database, so I can write views to extract all kinds of statistics. I already imported these e-mails into a MySQL database a few months ago, but was quite disappointed by the (performance) limitations of SQL. The e-mail thread contains over 20,000 messages, which weren’t a real problem for MySQL. When importing, however, CouchDB was adding them at a rate of only a few dozen per second, with a lot of (seek) noise from my HDD.

So I decided to do a simple benchmark. First off, a simple script (ser.py) that adds empty documents sequentially. It averages 16 per second. It occurred to me that CouchDB waits for an fsync before sending a response, and that creating documents asynchronously would perform way better. A simple modification to the script later (par.py), it still averaged 16 creations per second.

I guess, for I haven’t yet figured out how to get strace to tell me, that it’s the fsync after each object creation which causes the mess. CouchDB itself doesn’t write or seek a lot, but my journaling filesystem (XFS) does on an fsync.
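A rough way to check that guess on a given filesystem is to time small appends with and without an fsync after each one. This sketch does not reproduce what CouchDB does internally. The function name `time_appends` and the record contents are assumptions for illustration.

```python
import os
import tempfile
import time


def time_appends(count, sync, directory=None):
    """Append `count` small records to a temp file; return elapsed seconds.

    With sync=True, os.fsync is called after every write.
    """
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        started = time.perf_counter()
        for _ in range(count):
            os.write(fd, b"{}\n")
            if sync:
                os.fsync(fd)  # force the data to disk before continuing
        return time.perf_counter() - started
    finally:
        os.close(fd)
        os.remove(path)
```

Pass `directory` to point the test at the filesystem of interest, since the default temp directory may sit on a RAM-backed filesystem where fsync is cheap. Comparing `time_appends(200, True, "/some/dir")` with `time_appends(200, False, "/some/dir")` gives a feel for the per-fsync cost.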

Can anyone test it on a different filesystem?

Update: Around 17/sec with reiserfs.

Update: I had some trouble with the bulk update feature, so I switched from svn to the 0.7.2 release. With sequential bulk updates of 100 docs I got about 600/sec, which dropped to a steady-ish 350/sec. Two bulk updates in parallel yield about 950/sec initially, dropping to 550/sec after a while. Three parallel updates yield similar performance.