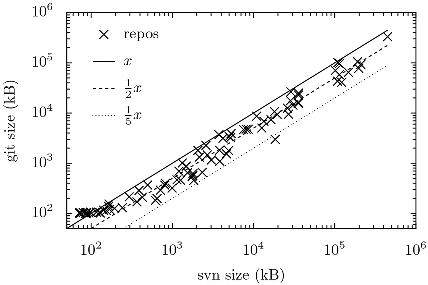

At Codeyard we maintain both a git and a subversion repository, kept in sync with each other, for each of the more than 115 projects. The graph plots every project on logarithmic axes: the size of its whole server-side subversion repository horizontally, and the size of its git repository vertically.

To make more sense of the logarithmic nature of the graph, I’ve added three lines. The first (solid black) marks the points at which both sizes are equal. The second (coarsely dashed) marks the points at which the subversion repository is twice as large as the git repository. The third (finely dashed) marks the points at which the subversion repository is five times as large as the git repository.
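Why the three lines run parallel can be sketched in a few lines of Python: on log-log axes a fixed size ratio becomes a fixed vertical offset of log10(ratio). The function names and sample sizes below are made up for illustration and are not taken from the actual plotting script.

```python
import math

def git_size_on_ratio_line(svn_bytes, ratio):
    """Git size at which the svn repository is `ratio` times as large."""
    return svn_bytes / ratio

def log_offset(ratio):
    """Distance in decades between a ratio line and the 1:1 line."""
    return math.log10(ratio)

# Illustrative svn sizes only (100 kB, 1 MB, 10 MB), not measured data.
for ratio in (1, 2, 5):
    gaps = []
    for svn_bytes in (1e5, 1e6, 1e7):
        git_bytes = git_size_on_ratio_line(svn_bytes, ratio)
        # The gap in log space is the same for every svn size.
        gaps.append(round(math.log10(svn_bytes) - math.log10(git_bytes), 3))
    print(ratio, gaps)
```

For each ratio the printed gaps are identical across all sizes, which is why the 2x and 5x lines sit at a constant distance from the solid 1:1 line.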

All projects for which git is less storage efficient are smaller than 100 kB. The projects for which git is most storage efficient (up to 6 times for one C# project) are all of medium size (10–100 MB) and code-heavy. For the other projects, which are blob-heavy (e.g. images), git and subversion are close (git beats svn by ~20%).
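A minimal sketch of how such a ratio could be computed, assuming the size of a repository is simply the sum of the file sizes under its server-side directory. That measuring method is my assumption here, and `directory_size` and `storage_ratio` are hypothetical helper names.

```python
import os

def directory_size(path):
    """Total size in bytes of all regular files below `path`."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            file_path = os.path.join(root, name)
            # Skip symlinks so nothing outside the tree is counted.
            if not os.path.islink(file_path):
                total += os.path.getsize(file_path)
    return total

def storage_ratio(svn_repo, git_repo):
    """How many times larger the svn repository is than the git one."""
    git_bytes = directory_size(git_repo)
    if git_bytes == 0:
        raise ValueError("git repository directory is empty")
    return directory_size(svn_repo) / git_bytes
```

A ratio above 1 means git is the more storage-efficient of the two for that project; the C# project mentioned above would come out at roughly 6.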

One notable disadvantage of huge git repositories (someone committed a live CD image) is that `git repack` appears to use $\geq 2N$ memory, even when I tell it not to with `--window-memory`.
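For reference, the kind of invocation meant here looks roughly like the sketch below. The `-a -d` flags and the `256m` limit are assumptions chosen for illustration, not the exact command I ran, and as noted the limit did not appear to cap memory usage.

```python
import subprocess

def build_repack_command(window_memory="256m"):
    """Build a `git repack` command line with a delta window memory limit."""
    # -a packs everything into a single pack, -d removes redundant packs.
    return ["git", "repack", "-a", "-d", f"--window-memory={window_memory}"]

def repack(repo_path, window_memory="256m"):
    """Run the repack inside `repo_path`; raises if git exits non-zero."""
    subprocess.run(build_repack_command(window_memory), cwd=repo_path, check=True)
```

Calling `repack("/path/to/repo.git")` with a hypothetical path would run the command in that repository.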